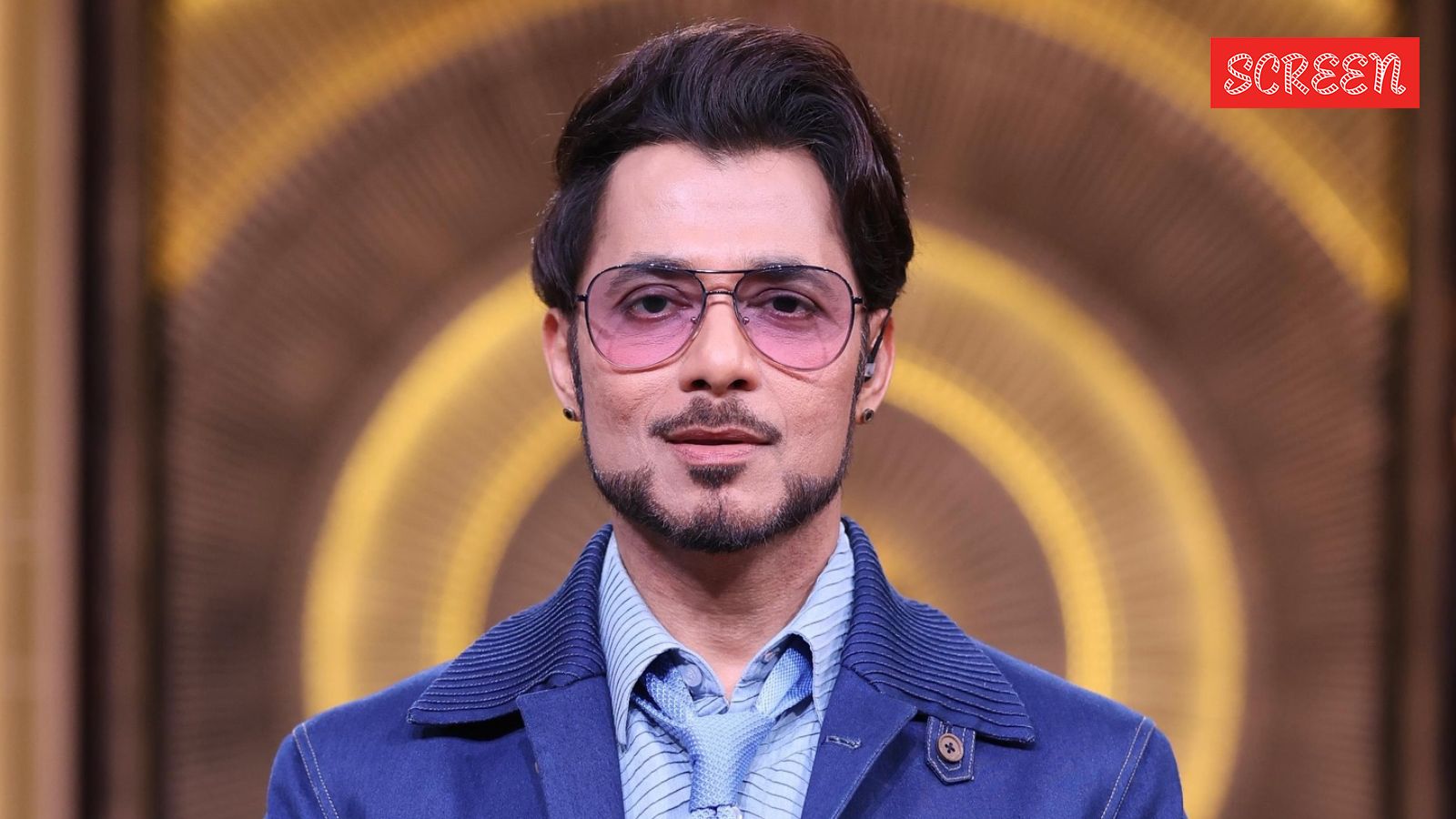

The recent controversy over Ranveer Allahbadia's comments on Samay Raina's show, India's Got Latent, has ignited a fierce debate about freedom of speech, accountability, and the role of platforms like YouTube in moderating content. The uproar, marked by multiple FIRs and sharply divided public reactions, has now drawn in Anupam Mittal, a prominent figure from Shark Tank India. In a detailed LinkedIn post, Mittal does not explicitly take sides, but he raises pointed questions about shared responsibility and, in particular, about YouTube's role. He acknowledges that Allahbadia's comments were reprehensible, yet argues that India's Got Latent was built on irreverence, vulgarity, and shock value, so the remarks were not entirely out of step with the show's overall ethos.

This framing is meant to put the controversy in context, but it raises difficult questions of its own. Does the established tone of a show lessen the severity of offensive comments made on it? Should YouTube, as the platform hosting the content, bear greater responsibility for the discourse it allows? These questions deserve careful consideration at a time when online content wields significant influence and can have tangible real-world consequences.

Mittal's call to summon YouTube to the High Court alongside Ranveer Allahbadia and Apoorva reflects a growing concern about the accountability of digital platforms. It rests on the argument that platforms are not neutral conduits for content but active participants in shaping the digital landscape: because they profit from what they host, they also inherit some responsibility for its impact.

Free speech is fundamental in a democratic society, but it is not absolute. The law typically restricts speech that incites violence, promotes hatred, or defames individuals. The challenge lies in drawing those boundaries, especially in entertainment. Shows like India's Got Latent deliberately push the envelope, blurring the lines between humor, satire, and outright offensiveness, and deciding when such content becomes harmful speech requires nuanced judgment and a close reading of context. The argument that the show's format excuses the comments also sets a risky precedent: it could be used to justify a wide range of offensive statements in the name of artistic expression or comedic license. The task, then, is to balance the protection of free expression against the prevention of harmful content, a balance that depends not only on legal frameworks but also on ethical choices by creators, platforms, and viewers alike.

The debate surrounding India's Got Latent also raises questions about the changing landscape of comedy and entertainment. What was once considered edgy may now be seen as offensive or insensitive, reflecting evolving social norms and a growing awareness of sexism, racism, and other forms of discrimination. Comedians and entertainers are increasingly held accountable for the impact of their words. This does not mean comedy should be sanitized or that comedians should stop taking risks; it does mean they should recognize the reach of their platform and the consequences their words can carry.

The controversy also highlights the importance of media literacy. Viewers need the critical thinking skills to distinguish satire and humor from outright offensiveness, and they should understand the real-world impact of online content and the value of reporting harmful or inappropriate material.

Finally, the incident exposes the difficulty of content moderation on platforms like YouTube. With hundreds of hours of video uploaded every minute, human moderators cannot review every piece of content, so platforms rely on algorithms and user reports to flag potentially problematic material. These systems are far from foolproof and often miss subtler forms of hate speech or incitement to violence. More effective moderation is therefore essential, not only to meet legal requirements but also to foster a more responsible and ethical online environment.

Mittal's stance also calls for a closer look at platform liability. Should YouTube, or any similar platform, be held responsible for content generated by its users? Prevailing legal frameworks often shield platforms from liability for user-generated content on the principle that they are merely intermediaries. In India, for instance, Section 79 of the Information Technology Act, 2000 grants intermediaries a "safe harbour", provided they observe prescribed due diligence and act on lawful takedown orders. That principle is increasingly being challenged, particularly where platforms actively promote or profit from harmful content. Mittal's suggestion to summon YouTube to the High Court reflects a growing sentiment that platforms should bear greater responsibility for what they host, especially when it is potentially illegal or harmful, and it aligns with a broader push to hold social media companies accountable for the spread of misinformation, hate speech, and other harmful material.

Critics counter that holding platforms liable for user-generated content could stifle free speech and innovation: to avoid lawsuits, platforms might over-censor, producing a chilling effect on expression. Proponents reply that the benefits of curbing harmful content outweigh these costs, and that platforms have a moral and ethical duty to protect their users and should answer for it when they fail. This debate is likely to continue as policymakers and regulators grapple with governing the digital age, and striking the right balance will be crucial to keeping the internet a valuable space for information, communication, and entertainment.

The incident also underscores the importance of fostering a culture of respect and inclusivity online. The internet can connect people across backgrounds and perspectives, but it can also breed hate speech, harassment, and other forms of abuse. Building a more positive online environment calls for a multi-faceted approach: education and awareness-raising, so that people understand the impact of their words and actions and develop empathy for others; effective enforcement, to deter harmful behavior and hold perpetrators accountable; and visible role models who demonstrate respectful, inclusive communication and encourage others to follow suit. None of this works without a collective effort from individuals, platforms, and policymakers, aimed at a digital world that is safer, more welcoming, and more open to free expression and dialogue.

In this sense, Mittal's perspective acts as a catalyst for a much broader and necessary discussion about the blurring lines between entertainment, responsibility, and the pervasive influence of online platforms. The path forward requires careful thought, open dialogue, and a commitment to a digital ecosystem that values both freedom of expression and the well-being of its users. The future of content moderation, platform accountability, and ethical online engagement depends on every stakeholder taking part in navigating this complex terrain.

Censorship is another concern in this debate. Most people agree that hate speech and content inciting violence should be removed, but the line blurs with satire, parody, and controversial opinions. Who decides what is acceptable? The risk of over-censorship is real and could stifle creativity and free expression. Relying solely on algorithms to moderate content can also introduce bias and unintended consequences; algorithms may, for example, disproportionately flag content from marginalized communities or misread cultural nuance. Human oversight is therefore crucial for bringing context and sensitivity to moderation decisions, although human reviewers bring their own subjectivity and biases.

This is why transparency and accountability matter. Platforms should publish clear content policies, offer users a clear process for appealing decisions, and be open about the algorithms they use and the data they collect. Doing so builds trust and helps keep moderation decisions fair and consistent.

Censorship also raises the question of government regulation. Some argue that governments should take a more active role, setting standards for online content and enforcing them; others fear that state regulation could slide into political censorship and undermine free expression. Finding the right balance between government regulation and platform self-regulation requires weighing the benefits and risks of each. The goal should be a regulatory framework that promotes responsible online behavior without stifling creativity and free expression.

The debate surrounding India's Got Latent also underscores the importance of critical thinking and media literacy. In an age of information overload, people must be able to evaluate content critically and tell fact from fiction. Media literacy education can help them recognize misinformation, propaganda, and other forms of manipulation, understand the biases that shape online content, and form their own informed opinions. Censorship and regulation alone cannot solve the problems posed by online content; lasting solutions also depend on education, awareness-raising, and the promotion of responsible online behavior. By empowering individuals to make informed choices and engage with content critically, we can build a more positive and inclusive online environment.

Beyond the immediate controversy lies a broader issue: the increasingly intertwined relationship between entertainment, social commentary, and potential harm. Shows that deliberately court controversy operate in a gray area where the line between humor and offense is subjective and constantly shifting. That calls for a more proactive approach from creators and platforms alike in anticipating and mitigating negative consequences. Creators should reflect on how their words may land with different audiences, take responsibility for their actions, and apologize when they cross the line. Platforms, for their part, should invest in more sophisticated moderation tools and processes, be more transparent about their content policies, and give users clear channels for reporting harmful content.

Technical and procedural fixes are not enough on their own; a broader cultural shift is also needed in how we consume and engage with online content. We must be more mindful of the impact of our words and actions online and hold ourselves and others accountable, which requires a collective effort from individuals, platforms, policymakers, and educators.

Mittal's commentary adds a crucial dimension to this already intricate discussion. By explicitly naming YouTube alongside the individuals involved, he forces a confrontation with the realities of platform responsibility. That, in turn, invites a re-evaluation of the legal and ethical standards governing online content and could pave the way for greater accountability and a more responsible digital landscape.

Ultimately, the goal is to strike a delicate balance between protecting freedom of expression and preventing the spread of harmful content. That requires a nuanced approach that weighs the context, intent, and potential impact of online content, along with ongoing dialogue and collaboration among all stakeholders. Only then can the digital world be both vibrant and responsible, a place where creativity and innovation flourish without sacrificing the well-being of individuals and communities.